In the past few months we have been deluged with headlines about new AI tools and how much they are going to change society.

Some reporters have done amazing work holding the companies developing AI accountable, but many struggle to report on this new technology in a fair and accurate way.

We (an investigative reporter, a data journalist, and a computer scientist) have firsthand experience investigating AI. We’ve seen the tremendous potential these tools can have, but also their tremendous risks.

As their adoption grows, we believe that, soon enough, many reporters will encounter AI tools on their beat, so we wanted to put together a short guide to what we have learned.

So we’ll start with a simple explanation of what they are.

In the past, computers were fundamentally rule-based systems: if a particular condition A is satisfied, then perform operation B. But machine learning (a subset of AI) is different. Instead of following a set of rules, we can use computers to recognize patterns in data.

For example, given enough labeled photographs (hundreds of thousands or even millions) of cats and dogs, we can teach certain computer systems to distinguish between images of the two species.

This process, known as supervised learning, can be performed in many ways. One of the most common techniques used recently is called neural networks. But while the details vary, supervised learning tools are essentially all just computers learning patterns from labeled data.
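The contrast between a hand-written rule and a pattern learned from labels can be sketched in a few lines. This is a toy illustration, not how any production system works: the animal weights, the 10 kg rule, and the function names `rule_based`, `learn_cutoff`, and `learned` are all invented for the example, and a single cutoff stands in for the far richer patterns a neural network would learn from photographs.

```python
# Hypothetical labeled examples, invented for illustration:
# (body weight in kg, label supplied by a person).
LABELED = [
    (3.5, "cat"), (4.2, "cat"), (4.8, "cat"), (5.0, "cat"),
    (7.5, "dog"), (9.0, "dog"), (12.5, "dog"), (20.0, "dog"),
]


def rule_based(weight_kg):
    """Rule-based system: a programmer hard-codes the condition."""
    return "dog" if weight_kg > 10.0 else "cat"


def learn_cutoff(examples):
    """Supervised learning in miniature: choose the cutoff that makes
    the fewest mistakes on the labeled examples."""
    weights = sorted(weight for weight, _ in examples)
    # Candidate cutoffs are the midpoints between neighboring weights.
    candidates = [(a + b) / 2 for a, b in zip(weights, weights[1:])]

    def mistakes(cutoff):
        return sum(
            ("dog" if weight > cutoff else "cat") != label
            for weight, label in examples
        )

    return min(candidates, key=mistakes)


cutoff = learn_cutoff(LABELED)


def learned(weight_kg):
    """Classifier whose cutoff came from the data, not from a person."""
    return "dog" if weight_kg > cutoff else "cat"


print(cutoff)            # cutoff inferred from the labels
print(rule_based(7.5))   # the hand-written rule's answer for a 7.5 kg animal
print(learned(7.5))      # the learned classifier's answer
```

On this made-up data the learned cutoff falls between the heaviest cat and the lightest dog, so the learned classifier labels the 7.5 kg animal a dog while the hand-written 10 kg rule calls it a cat. Change the labeled examples and the cutoff changes with them, which is the sense in which the model is only as good as its data.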

Similarly, one of the techniques used to build recent models like ChatGPT is called self-supervised learning, where the labels are generated automatically.
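One common way labels can be generated automatically is to hide the next word of a sentence and ask the model to predict it. The sketch below only shows how such training pairs could be carved out of raw text; the function name `next_word_examples` and the `context_size` parameter are hypothetical, and real systems work on sub-word tokens and vastly more text.

```python
def next_word_examples(text, context_size=3):
    """Turn raw text into (context, label) pairs with no human labeling.

    The "label" for each example is simply the word that follows
    the preceding `context_size` words in the original text.
    """
    words = text.split()
    examples = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        label = words[i]
        examples.append((context, label))
    return examples


pairs = next_word_examples("the cat sat on the mat")
for context, label in pairs:
    print(context, "->", label)
```

No person had to annotate anything here: the text itself supplies both the question and the answer.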

Be skeptical of PR hype

People in the tech industry often claim they are the only people who can understand and explain AI models and their impact. But reporters should be skeptical of these claims, especially when coming from company officials or spokespeople.

“Reporters tend to just pick whatever the author or the model producer has said,” Abeba Birhane, an AI researcher and senior fellow at the Mozilla Foundation, said. “They just end up becoming a PR machine themselves for those tools.”

In our analysis of AI news, we found that this was a common issue. Birhane and Emily Bender, a computational linguist at the University of Washington, suggest that reporters talk to domain experts outside the tech industry and not just give a platform to AI vendors hyping their own technology. For instance, Bender recalled that she read a story quoting an AI vendor claiming their tool would revolutionize mental health care. “It’s obvious that the people who have the expertise about that are people who know something about how therapy works,” she said.

In the Dallas Morning News’s series of stories on Social Sentinel, the company repeatedly claimed its model could detect students at risk of harming themselves or others from their posts on popular social media platforms and made outlandish claims about the performance of its model. But when reporters talked to experts, they learned that reliably predicting suicidal ideation from a single post on social media is not feasible.

Many editors could also choose better images and headlines, said Margaret Mitchell, chief ethics scientist of the AI company Hugging Face. Inaccurate headlines about AI often influence lawmakers and regulation, which Mitchell and others then have to try to fix.

“If you just see headline after headline that are these overstated or even incorrect claims, then that’s your sense of what’s true,” Mitchell said. “You are creating the problem that your journalists are trying to report on.”

Question the training data

After the model is “trained” with the labeled data, it is evaluated on an unseen data set, known as the test or validation set, and scored using some sort of metric.

The first step when evaluating an AI model is to see how much and what kind of data the model has been trained on. The model can only perform well in the real world if the training data represents the population it is being tested on. For example, if developers trained a model on ten thousand pictures of puppies and fried chicken, and then evaluated it using a photo of a salmon, it likely wouldn’t do well. Reporters should be wary when a model trained for one objective is used for a completely different objective.

In 2017, Amazon researchers scrapped a machine learning model used to filter through résumés, after they discovered it discriminated against women. The culprit? Their training data, which consisted of the résumés of the company’s past hires, who were predominantly men.

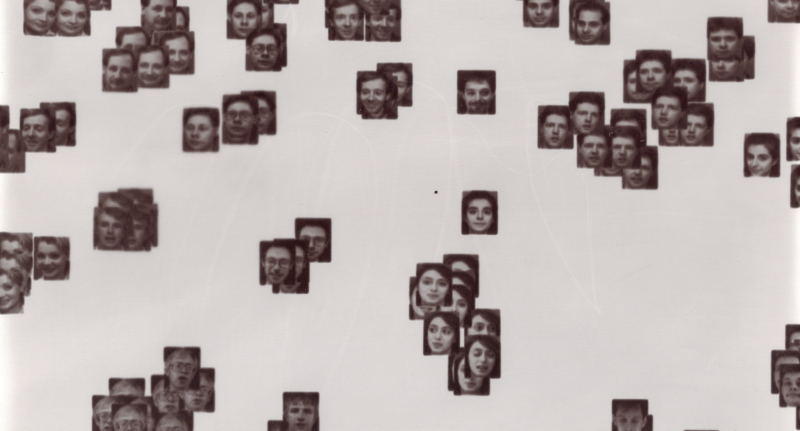

Data privacy is another concern. In 2019, IBM released a data set with the faces of a million people. The following year a group of plaintiffs sued the company for including their photographs without consent.

Nicholas Diakopoulos, a professor of communication studies and computer science at Northwestern, recommends that journalists ask AI companies about their data collection practices and whether subjects gave their consent.

Reporters should also consider the company’s labor practices. Earlier this year, Time magazine reported that OpenAI paid Kenyan workers $2 an hour for labeling offensive content used to train ChatGPT. Bender said these harms should not be ignored.

“There’s a tendency in all of this discourse to basically believe all of the potential of the upside and dismiss the actual documented downside,” she said.

Evaluate the model

The final step in the machine learning process is for the model to output a guess on the testing data and for that output to be scored. Typically, if the model achieves a good enough score, it is deployed.

Companies trying to sell their models frequently quote numbers like “95 percent accuracy.” Reporters should dig deeper here and ask if the high score only comes from a holdout sample of the original data or if the model was checked with realistic examples. These scores are only valid if the testing data matches the real world. Mitchell suggests that reporters ask specific questions like “How does this generalize in context?” and “Was the model tested ‘in the wild’ or outside of its domain?”
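A small sketch shows why a holdout score alone can mislead. Everything here is hypothetical: the `model` function stands in for a trained classifier, `HOLDOUT` imitates data set aside from the same source the model was trained on, and `FIELD` imitates cases met after deployment (small dogs, large cats) that the original data never covered.

```python
def accuracy(model, examples):
    """Share of examples for which the model's guess matches the label."""
    correct = sum(model(x) == label for x, label in examples)
    return correct / len(examples)


def model(weight_kg):
    # Stand-in for a trained classifier; the 6.25 kg cutoff is invented.
    return "dog" if weight_kg > 6.25 else "cat"


# Holdout sample: drawn from the same kind of data the model learned from.
HOLDOUT = [
    (3.9, "cat"), (4.6, "cat"), (5.1, "cat"),
    (8.0, "dog"), (11.0, "dog"), (18.0, "dog"),
]

# Field sample: the mix of animals seen in real use is different.
FIELD = [
    (4.0, "cat"), (8.2, "cat"), (7.1, "cat"),
    (2.8, "dog"), (5.5, "dog"), (14.0, "dog"),
]

print("holdout accuracy:", accuracy(model, HOLDOUT))
print("field accuracy:  ", accuracy(model, FIELD))
```

The same model scores perfectly on the holdout sample and gets only a third of the field sample right, which is why it matters where the quoted accuracy number was measured.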

It’s also important for journalists to ask what metric the company is using to evaluate the model, and whether that is the right one to use. A useful question to consider is whether a false positive or a false negative is worse. For example, in a cancer screening tool, a false positive may result in people getting an unnecessary test, while a false negative might result in missing a tumor in its early stage when it is treatable.
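The choice of metric can be made concrete with invented numbers. Assume a hypothetical screening of 1,000 people, 10 of whom have a tumor, and compare two made-up tools: one that never flags anyone and one that flags every true case plus 90 healthy people. The helper `confusion` and both prediction lists are illustrative only.

```python
def confusion(y_true, y_pred):
    """Count true/false positives and negatives for boolean labels."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted and actual:
            counts["tp"] += 1
        elif predicted and not actual:
            counts["fp"] += 1
        elif not predicted and not actual:
            counts["tn"] += 1
        else:
            counts["fn"] += 1
    return counts


def accuracy(counts):
    return (counts["tp"] + counts["tn"]) / sum(counts.values())


# Hypothetical population: 10 people with a tumor, 990 without.
y_true = [True] * 10 + [False] * 990

# Tool A never flags anyone.
never_flags = [False] * 1000
# Tool B flags all 10 true cases and also 90 healthy people.
cautious = [True] * 10 + [True] * 90 + [False] * 900

a = confusion(y_true, never_flags)
b = confusion(y_true, cautious)
print("Tool A:", a, "accuracy", accuracy(a))
print("Tool B:", b, "accuracy", accuracy(b))
```

Tool A reaches 99 percent accuracy while missing every tumor; Tool B scores 91 percent but misses none, at the cost of 90 unnecessary follow-up tests. Which tool is "better" depends on which error is judged worse, not on the accuracy figure.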

The difference in metrics can be crucial in determining questions of fairness in the model. In May 2016, ProPublica published an investigation into an algorithm called COMPAS, which aimed to predict a criminal defendant’s risk of committing a crime within two years. The reporters found that, despite having similar accuracy between Black and white defendants, the algorithm had twice as many false positives for Black defendants as for white defendants.

The article ignited a fierce debate in the academic community over competing definitions of fairness. Journalists should specify which version of fairness is used to evaluate a model.
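How two groups can have equal accuracy and unequal error rates is easier to see with arithmetic. The counts below are invented for illustration and are not ProPublica's data; `group_a`, `group_b`, `make_records`, and `rates` are hypothetical names. Each record pairs the tool's prediction (flagged high risk or not) with what actually happened.

```python
from collections import Counter


def make_records(tp, fp, tn, fn):
    """Build (predicted_high_risk, reoffended) pairs from four counts."""
    return (
        [(True, True)] * tp
        + [(True, False)] * fp
        + [(False, False)] * tn
        + [(False, True)] * fn
    )


def rates(records):
    """Accuracy, false positive rate, and false negative rate for one group."""
    c = Counter()
    for predicted, actual in records:
        if predicted and actual:
            c["tp"] += 1
        elif predicted and not actual:
            c["fp"] += 1
        elif not predicted and not actual:
            c["tn"] += 1
        else:
            c["fn"] += 1
    total = sum(c.values())
    return {
        "accuracy": (c["tp"] + c["tn"]) / total,
        # Of people who did NOT reoffend, what share was flagged anyway?
        "false_positive_rate": c["fp"] / (c["fp"] + c["tn"]),
        # Of people who DID reoffend, what share was not flagged?
        "false_negative_rate": c["fn"] / (c["fn"] + c["tp"]),
    }


# Invented counts: 100 people per group, 50 of whom reoffended in each.
group_a = rates(make_records(tp=30, fp=20, tn=30, fn=20))
group_b = rates(make_records(tp=20, fp=10, tn=40, fn=30))

print("group A:", group_a)
print("group B:", group_b)
```

Both hypothetical groups come out at 60 percent accuracy, yet group A's false positive rate (40 percent) is twice group B's (20 percent), while group B has the higher false negative rate. A tool can look fair under one definition and unfair under another, so the metric has to be named.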

Recently, AI developers have claimed their models perform well not only on a single task but in a variety of situations. “One of the things that’s going on with AI right now is that the companies producing it are claiming that these are basically everything machines,” Bender said. “You can’t test that claim.”

In the absence of any real-world validation, journalists should not believe the company’s claims.

Consider downstream harms

As important as it is to know how these tools work, the most important thing for journalists to consider is what impact the technology is having on people today. Companies like to boast about the positive effects of their tools, so journalists should remember to probe the real-world harms the tool could enable.

AI models not working as advertised is a common problem, and has led to several tools being abandoned in the past. But by that time, the damage is often done. Epic, one of the largest healthcare technology companies in the US, released an AI tool to predict sepsis in 2016. The tool was used across hundreds of US hospitals without any independent external validation. Finally, in 2021, researchers at the University of Michigan tested the tool and found that it worked much more poorly than advertised. A year later, after a series of follow-up investigations by Stat News, Epic stopped selling its one-size-fits-all tool.

Ethical issues arise even if a tool works well. Face recognition can be used to unlock our phones, but it has already been used by companies and governments to surveil people at scale. It has been used to bar people from entering concert venues, to identify ethnic minorities, and to monitor workers and people living in public housing, often without their knowledge.

In March reporters at Lighthouse Reports and Wired published an investigation into a welfare fraud detection model used by authorities in Rotterdam. The investigation found that the tool frequently discriminated against women and non-Dutch speakers, sometimes leading to highly intrusive raids of innocent people’s homes by fraud controllers. Upon examination of the model and the training data, the reporters also found that the model performed little better than random guessing.

“It is more work to go find workers who were exploited or artists whose data has been stolen or scholars like me who are skeptical,” Bender said.

Jonathan Stray, a senior scientist at the Berkeley Center for Human-Compatible AI and former AP editor, said that talking to the people who are using or are affected by the tools is almost always worth it.

“Find the people who are actually using it or trying to use it to do their work and cover that story, because there are real people trying to get real things done,” he said.

“That’s where you’re going to find out what the reality is.”

Sayash Kapoor, Hilke Schellmann and Ari Sen are, respectively, a Princeton University computer science Ph.D. candidate, a journalism professor at New York University, and a computational journalist at the Dallas Morning News. Hilke and Ari are AI accountability fellows at the Pulitzer Center.